
The best QA testing practices are still in demand amongst many organizations. QA teams are always looking to develop the best strategy to improve efficiency and deliver top quality products to stakeholders.

Testing teams across many kinds of projects, from mobile apps to software products to CMS websites, are adopting the Agile process. While Agile methods have raised the profile of testers in many ways, they have also raised questions, specifically about how to be most effective in short sprints while still delivering a quality product.

At Argil DX, we emphasize client-focused delivery, wherein we study the client’s business requirements and decide the best QA testing practices for agile software development.

Let’s start with a look at some of the best QA testing practices enabling us to deliver quality software products to clients. These practices can be effective in helping your team deliver quality products.

1. Communication

A general challenge every QA team faces is maintaining clear communication between all the parties involved in a project, i.e. developers, management and customers. Effective communication, backed by a process that everyone follows and maintains, enables productive collaboration. Simple examples include creating a model ticket, establishing code review procedures and agreeing on clear labeling schemes. Most teams fail to make proper use of the available tools to communicate effectively. This in turn leads to issues such as sudden changes in the code, or a minor change in the requirements that wasn’t documented or mentioned in the user story.

Tickets in Pivotal Tracker or in defect management tools like JIRA are a fundamental part of the best QA testing practices at Argil DX. We use them all day, every day. Whether you’re describing a bug or a feature, ensure that the ticket you create is detailed and easy to understand.

It’s the ticket creator’s job to give the exact details necessary to address the ticket: the title or summary, the body or description, the steps to reproduce, and the labels if required. It should clearly state the problem and/or the expected outcome.

When creating a ticket, you can use sub-tasks if breaking the main task into smaller, more achievable tasks would help. Remember, never leave a ticket incomplete. Sometimes tickets are prepared hurriedly, on the go during a meeting or sanity testing, and need more description added later. That’s OK. However, all too often the description section is simply skipped because the title is assumed to imply the outcome.

Every time you create a ticket, ask yourself the following questions before completing it.

a. Will someone else easily understand this ticket?

b. Did you provide the detailed information and your own insight?

c. Could you easily give this ticket to a QA team member from another project and would he or she still be able to check it without any extra explanation?

If you answer these questions with an emphatic yes, especially the last one, then it’s a good sign that the ticket is ready for the world.

Lastly and most importantly, always keep your tickets up to date. Always add information about changes in the project, even small ones. For example, if you decide to change the content, mention it. Remember that your QA team pays attention to detail; after noticing the updates, they will decide whether the particular changes were intended or not. If a new mockup is ready, always replace the outdated file with the latest version.
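The ticket checklist above can be sketched as a small completeness check. This is only an illustration: the `Ticket` class, its field names and the `missing_details` helper are hypothetical and do not correspond to the API of Pivotal Tracker, JIRA or any other tool.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    """Hypothetical ticket model; field names are illustrative, not a tracker API."""
    title: str
    description: str = ""
    steps_to_reproduce: list[str] = field(default_factory=list)
    expected_outcome: str = ""
    labels: list[str] = field(default_factory=list)
    is_bug: bool = True


def missing_details(ticket: Ticket) -> list[str]:
    """Return the names of details a reader would still have to ask for."""
    missing = []
    if not ticket.title.strip():
        missing.append("title")
    if not ticket.description.strip():
        missing.append("description")
    if not ticket.expected_outcome.strip():
        missing.append("expected_outcome")
    # Steps to reproduce are only required for bugs, not for feature tickets.
    if ticket.is_bug and not ticket.steps_to_reproduce:
        missing.append("steps_to_reproduce")
    return missing


# A ticket written in a hurry: only the title is filled in.
hurried = Ticket(title="Login button broken")

# A ticket someone from another project could pick up without extra explanation.
complete = Ticket(
    title="Login button does nothing on first click",
    description="On the login page the button ignores the first click.",
    steps_to_reproduce=["Open the login page", "Enter valid credentials", "Click Login once"],
    expected_outcome="The user is signed in after a single click.",
    labels=["bug", "login"],
)

print(missing_details(hurried))
print(missing_details(complete))
```

A check like this cannot judge whether a description is actually understandable, so it complements the three questions rather than replacing them.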

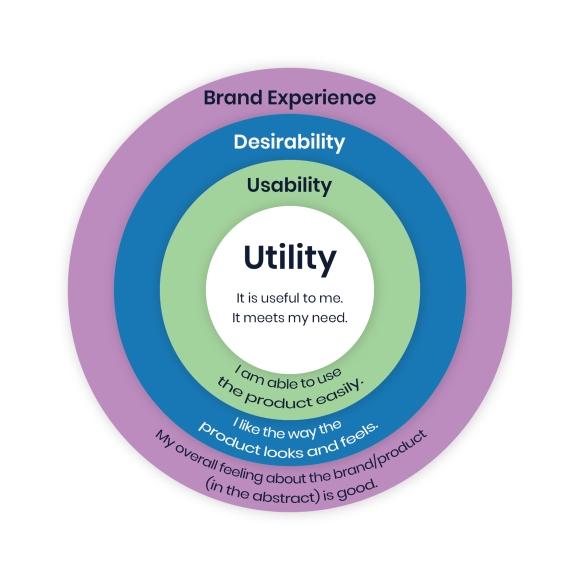

2. Testing to meet the usability or UX standards your customers expect

We believe in usability testing and like to find even the simplest usability flaws in a piece of software. However, on looking closer we often find that the development team delivered the software without fully understanding the requirements. The issue is then classified as “Worked as designed.”

This is where we must communicate more with our clients and understand what the best user experience will be, by keeping in mind the type of users who will be using the software and some day-to-day activities conducted on that software/website/application.

What top-notch testers have in common is their laser-like focus on the user experience. It’s far too easy for testers to get lost in the weeds of test cases and forget about the actual end user, but this is a fatal mistake.

We’ll never be able to catch every weird, obscure bug, but there are always some design elements where they tend to lurk. By focusing our testing efforts on some of the following areas — or at least not neglecting them — we can catch more issues before our customers do:

a) Too many fields on a single page:

Your end users are probably doing multiple things at once, so if you give them more than 10-15 fields to fill in before they can save, it is an issue. You can suggest using a multipage form or a way for the user to save a transaction in a temporary state. The idea is to create a more user-friendly design and workflow for that module.

b) Authoring experience in a CMS website:

Many companies have a separate team of content authors who add content to the website daily. In scenarios like these, the content authors become your end users. It becomes imperative for any QA team member to put themselves in the authors’ shoes while testing a component and suggesting a change in the authoring experience. The authoring of any page or component should be easy to understand, with the most important fields or options highlighted first so that the user moves on to the less important authoring fields afterwards.

c) User journey in a software, website or application:

This is a very important area of focus while testing any product, as it is the foundation on which user experience or usability was introduced into the world of QA. There are many studies and reports of applications with a good concept and idea failing in the market because of a bad user experience caused by an unsatisfactory user journey. The user journey should be extremely simple and easy to understand, with the desired results displayed at every step of the way. Otherwise, the user gets exhausted because they keep selecting options and nothing relevant to their search is displayed.

3. Build an exciting framework of testing approach:

The QA team can come up with a new testing strategy in the agile world of short sprints to deliver a quality product. Some of the testing approaches can be:

a) The QA team should develop a healthy relationship with Business Analysts.

b) Ensure that every user story is specific to what you want to tell the user. It should also be testable and include acceptance criteria.

c) Don’t ignore non-functional testing such as load, performance and security testing. Make sure that we do both functional and non-functional testing from the very start of the project.

d) Build meaningful end-to-end test scenarios by utilizing trends, data and analytics from the existing product to gather information about user activities and user journeys through the application.

e) Build a strong testing/QA practice which drives development. Define an Agile QA Testing Strategy and adopt tools which have a good amount of customization available for the need of your project.

f) Conduct regular QA workshops within the team where the testers can improve their technical skills as well as soft skills.

g) Take advantage of technical architecture diagrams, models of the application and mind maps to implement suitable test techniques.

h) Embed QA within the teams with appropriate owners, so that they are aware of any changes to the application.

4. Implement SBTM – Session-Based Test Management:

Session-Based Test Management (SBTM) is “a method for measuring and managing exploratory testing.” In a nutshell, it is a test management framework made for effective exploratory testing and for finding results without executing scripted test cases.

Exploratory testing is always unscripted, unrehearsed testing. Its effectiveness purely depends on several intangibles: the skill of the tester, their intuition, experience, and ability to follow hunches and look for unexplored areas. It’s these intangibles that often confound test managers when it comes to being accountable for the results of the exploratory testing performed.

For example, at the end of the day, when a team lead or manager asks an exploratory tester for the status, they may get an answer like “Oh, you know… I tested some modules here and there, just looking around as of now.” Even though the tester may have filed several bugs, the manager might have no idea what they did to find them. Even if the lead or manager were skilled enough to ask the right questions about what the tester did, the tester may have forgotten the details or may not be able to describe their findings in a quantifiable manner.

Session-Based Test Management addresses this very problem. The framework can be used in the form of a template that documents the effort spent on exploratory testing. This documentation is called session metrics: figures derived from the sessions performed, which are the primary means of expressing the status of the exploratory test process. It contains the following elements:

- Number of sessions completed or rounds of testing performed

- Number of problems or issues found

- Functional areas covered such as the modules, pages or components

- Percentage of session time spent on setting up for testing, which is the average time of setting up the test data and test environment if any authoring or content sync is required

- Percentage of session time spent testing which is the actual time spent on testing the mentioned modules, components, etc.

- Percentage of session time spent investigating problems which is the investigation time spent on each bug or an issue
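The session metrics listed above can be derived from simple per-session records. The following is a minimal sketch, assuming each session logs its functional area, the minutes spent on setup, testing and investigation, and the number of issues found. The `Session` class and its field names are hypothetical, not part of any SBTM tool.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One exploratory testing session; all fields are illustrative."""
    area: str               # functional area covered (module, page, component)
    setup_min: int          # minutes spent on test data / environment setup
    testing_min: int        # minutes spent actually testing
    investigating_min: int  # minutes spent investigating problems
    issues_found: int


def session_metrics(sessions: list[Session]) -> dict:
    """Aggregate the session records into the metrics described in the text."""
    total = sum(s.setup_min + s.testing_min + s.investigating_min for s in sessions)
    if total == 0:
        raise ValueError("no session time recorded")

    def pct(minutes: int) -> float:
        # Share of the total session time, as a percentage with one decimal.
        return round(100 * minutes / total, 1)

    return {
        "sessions_completed": len(sessions),
        "issues_found": sum(s.issues_found for s in sessions),
        "areas_covered": sorted({s.area for s in sessions}),
        "setup_pct": pct(sum(s.setup_min for s in sessions)),
        "testing_pct": pct(sum(s.testing_min for s in sessions)),
        "investigating_pct": pct(sum(s.investigating_min for s in sessions)),
    }


sessions = [
    Session("checkout", setup_min=15, testing_min=60, investigating_min=15, issues_found=3),
    Session("search", setup_min=10, testing_min=70, investigating_min=30, issues_found=2),
]
metrics = session_metrics(sessions)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

With numbers like these, a lead can answer the status question in concrete terms, for example how much of the day went into setup rather than testing.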

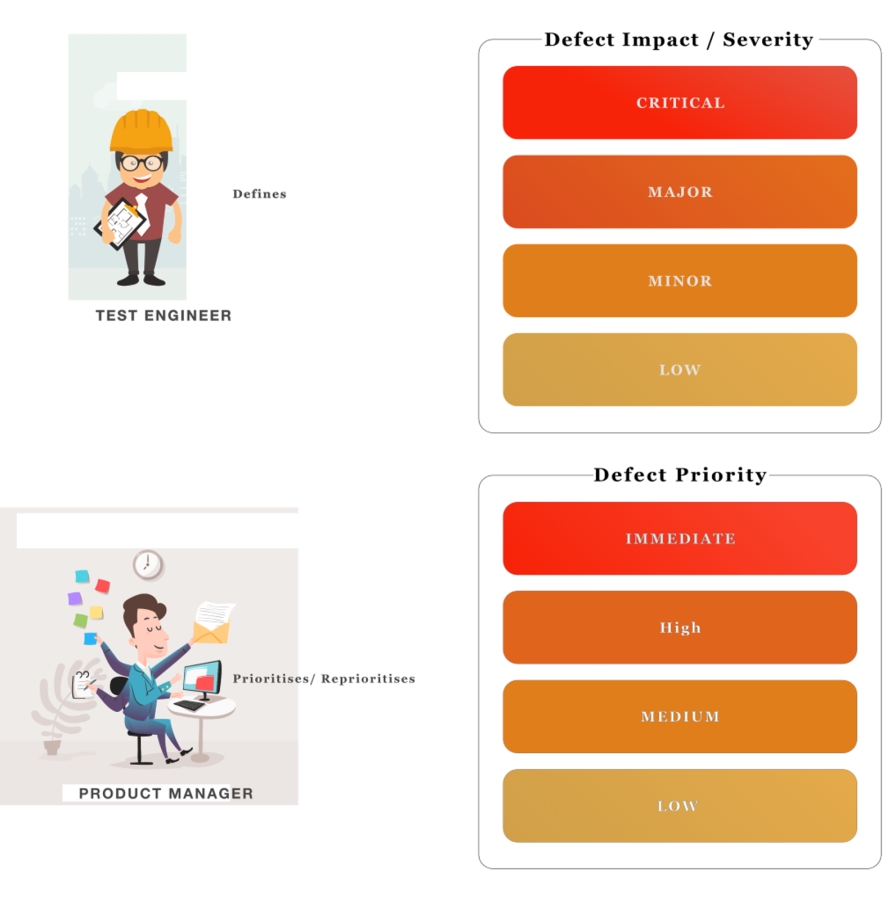

5. Effective use of severity and priority:

Defect tracking is one of the most important aspects of the defect lifecycle. This is important because the test teams open several defects when testing a piece of software, application or website which will only multiply if the system under test is complex. In these scenarios, managing the defects and analyzing these defects to drive closure can be a formidable task.

It is a useful practice to add Severity & Priority to each of the bugs entered in the system for a project. It helps all the stakeholders to track and maintain the bugs and prioritize the fixes accordingly.

However, these two concepts are often confused and used almost interchangeably by test teams as well as development teams. There’s a fine line between severity and priority, and it’s important to understand the differences between the two.

Let’s have a quick look at how these two differ from each other.

“Priority” is associated with scheduling the fix, and “severity” is associated with the impact of the defect.

“Priority” signifies that something is important and deserves to be attended to before others.

“Severity” signifies how seriously the defect affects the functioning of the product, regardless of when it is scheduled to be fixed.

The words priority and severity always come up in bug tracking. Determining the severity of a bug helps the development team to prioritize the bugs to be fixed. The priority status is often used by the product owners to determine which bug needs attention before going live.

A range of commercial software tools is available for problem tracking and management. These tools, with the detailed input of software test engineers, give the team complete information so that the developers can understand the bug, get an idea of its ‘severity’, reproduce it and fix it.

The fixes are based on project ‘priorities’ and ‘severities’ of bugs.

The ‘severity’ of a problem is defined in accordance with the customer’s risk assessment and recorded in their selected tracking tool.

Software that contains bugs can affect your release schedules, leading you to reassess and renegotiate the project priorities.
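One way to keep the two fields from blurring together is to record them separately on every defect. The sketch below assumes that severity describes how badly a defect affects the product and priority describes how soon the fix is scheduled. The level names and the `Defect` class are illustrative and not taken from any particular tracking tool.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Impact of the defect on the product (lower number = worse)."""
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3
    TRIVIAL = 4


class Priority(IntEnum):
    """How soon the fix should be scheduled (lower number = sooner)."""
    HIGH = 1
    MEDIUM = 2
    LOW = 3


@dataclass
class Defect:
    summary: str
    severity: Severity
    priority: Priority


defects = [
    # High severity, low priority: serious, but in a feature nobody uses yet.
    Defect("Crash when exporting a report nobody uses yet", Severity.CRITICAL, Priority.LOW),
    # Low severity, high priority: harmless technically, embarrassing at go-live.
    Defect("Company name misspelled on the home page", Severity.TRIVIAL, Priority.HIGH),
    Defect("Checkout fails for saved cards", Severity.CRITICAL, Priority.HIGH),
]

# Schedule fixes by priority first, using severity only to break ties.
fix_order = sorted(defects, key=lambda d: (d.priority, d.severity))
for d in fix_order:
    print(d.priority.name, d.severity.name, d.summary)
```

Sorting by priority first reflects the idea that the product owner decides what is fixed before going live, while severity remains the tester's assessment of impact.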

6. Defining best test strategy and test planning

This is undoubtedly the most important of the best QA testing practices, and every QA team should follow it regardless of the type of project. There are standard ways to define a test strategy and test plan, but most companies simply fill in the pointers in the document, dump it in the repository and never refer to it again.

We should not only define the test strategy and test plan documents but also review them constantly with our clients and update them according to the different phases of the project.

Here is how we like to use the test plan document:

- Master test plan: A high-level test plan for a project or product that unifies all other test plans or contains all the information about the project and gets updated on a regular basis. It’s basically an encyclopedia of the project from a testing perspective.

- Testing level specific test plans/Low level test plans: Plans for each level of testing or each module of the project.

– Unit Test Plan

– Integration Test Plan

– System Test Plan

– Acceptance Test Plan

– Story/Module Test plan

- Testing type specific test plans: Plans for major types of testing like Performance Test Plan and Security Test Plan and Regression Test Plan.

Test Plan Guidelines

1. Make the plan concise. Avoid redundancy. If you find that a section has no use in your project, go ahead and delete that section from your test plan.

2. Be specific in every detail you provide in the test plan. For example, when you specify an operating system as a property of a test device, mention the OS edition/version as well, not just the OS name.

3. Make good use of lists and tables wherever possible. Avoid lengthy paragraphs and convert your information into bullet points.

4. Make sure that the test plan is reviewed by all the stakeholders several times before baselining it or sending it for approval. The quality of your test plan will determine the quality of the testing you or your team are going to perform.

5. Update the plan as and when required. An outdated and unused document is worse than not having the document in the first place.

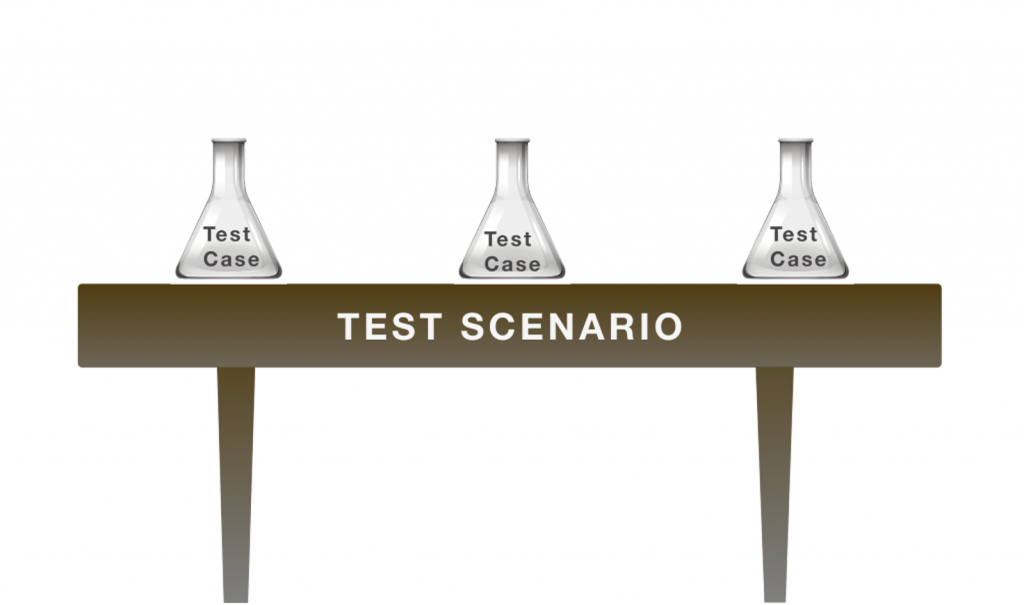

7. Writing test scenarios instead of test scripts:

There are a lot of terms and phrases used in the world of software testing. From a wide range of different testing methods, to a variety of testing types, and many test case templates that make up a software or application test, it can be hard to remember what exactly each term means. We like to keep things simple at Argil DX and just focus on the core of testing. Take for example “Test Cases” and “Test Scenarios”; what’s the difference and why are they needed?

In brief, a Test Scenario describes what is to be tested and a Test Case describes how it is to be tested. Moreover, a test scenario is a collection of test cases.

How does it help?

Test Scenario

The purpose of writing test scenarios is to test the end-to-end functionality of a software application, to ensure the business processes and flows are functioning as needed. In scenario testing, the tester thinks like an end user and determines real-world scenarios (use cases) that can be performed. Once these test scenarios are determined, each one can be converted into test cases. Test scenarios are the high-level concept of what to test, covering the major functionality of any module of a project.

Test Case

Test cases are sets of steps executed by a tester in order to validate the test scenario. Whereas test scenarios are derived from use cases, test cases are derived and written from those test scenarios. A test scenario can have multiple test cases associated with it, because test cases lay out the low-level details of how to test that scenario.

Example

Test Scenario: Validate the login page of a certain application.

Test Case 1: Enter a valid username and password

Test Case 2: Reset your password by clicking the reset password CTA (call-to-action button)

Test Case 3: Enter invalid credentials in both the fields
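In automated form, the same relationship can be sketched with Python's standard `unittest` module: one test class stands for the scenario, and each method is a test case. The `login` and `reset_password` functions and the in-memory user store are hypothetical stand-ins for the application under test, and the methods only roughly follow the three test cases above.

```python
import unittest

# Hypothetical stand-in for the application under test.
_USERS = {"alice": "s3cret"}


def login(username: str, password: str) -> bool:
    """Return True only when the username exists and the password matches."""
    return _USERS.get(username) == password


def reset_password(username: str, new_password: str) -> bool:
    """Set a new password for a known user; return False for unknown users."""
    if username not in _USERS:
        return False
    _USERS[username] = new_password
    return True


class LoginPageScenario(unittest.TestCase):
    """Test scenario: validate the login page."""

    def setUp(self):
        # Start every test case from the same known state.
        _USERS.clear()
        _USERS["alice"] = "s3cret"

    def test_valid_credentials(self):
        self.assertTrue(login("alice", "s3cret"))

    def test_reset_password(self):
        self.assertTrue(reset_password("alice", "n3w-pass"))
        self.assertTrue(login("alice", "n3w-pass"))
        self.assertFalse(login("alice", "s3cret"))

    def test_invalid_credentials_in_both_fields(self):
        self.assertFalse(login("mallory", "wrong"))


# Run the whole scenario, i.e. all of its test cases.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginPageScenario)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping the scenario as the unit of organization means new test cases can be added to it later without changing what the scenario is meant to validate.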

So, these are the seven best QA testing practices that we follow at Argil DX.

Read about the implementation of Software Testing Life Cycle (STLC) here. You might also be interested in reading our Testing Guide for improving website performance. For any queries related to software QA testing principles or performance testing and enhancement, reach out to us. We’d be glad to help you!